When working with corpora, it is sometimes useful to generate random samples from corpus results for manual analysis (e.g. to determine distribution percentages or the recall/precision of queries). BNCweb, CQPweb and (No)SketchEngine provide a built-in "thin" function for this purpose. However, if the results of corpus queries are only available as text files, the shuf command from GNU coreutils offers a comparable random thinning option. The examples below create a random sample of 100 lines (adapt the sample size to your project's needs). The reliability of manually checked results can be improved by drawing several samples of 100 lines (typically 2-3) and averaging the scores obtained from each sample.
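To illustrate the averaging step, here is a minimal Python sketch that computes the precision of each manually checked sample and then the mean across samples. The counts in correct_per_sample are invented placeholders, not real results, and the variable names are only assumptions for illustration.

```python
from statistics import mean

SAMPLE_SIZE = 100

# Hypothetical counts: how many of the 100 lines in each random sample
# were judged to be correct hits during manual checking.
correct_per_sample = [82, 78, 85]

# Precision of each sample = correct hits / lines checked.
precisions = [count / SAMPLE_SIZE for count in correct_per_sample]

# The averaged score across all samples.
average_precision = mean(precisions)

print("precision per sample:", [f"{p:.2f}" for p in precisions])
print(f"averaged precision: {average_precision:.3f}")
```

Because all samples have the same size here, the mean of the per-sample precisions equals the pooled precision over all checked lines.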

On Linux, there is a straightforward way to achieve this (type man shuf for details):

cd path_to_text_file

shuf -n 100 results.txt

In order to save the random sample into a new text file, specify an output file:

shuf -n 100 -o random_sample.txt results.txt

On Mac OS X, it is slightly more complicated, because a Linux-like package manager (e.g. Homebrew) and the coreutils package have to be installed first (see the gshuf Tutorial OSX and the corresponding random_sample.zip, intended for novice users who are not familiar with the OS X terminal). Once the gshuf command is available, the invocation is analogous (type man gshuf for details):

cd path_to_text_file

gshuf -n 100 results.txt

In order to save the random sample into a new text file, specify an output file:

gshuf -n 100 -o random_sample.txt results.txt

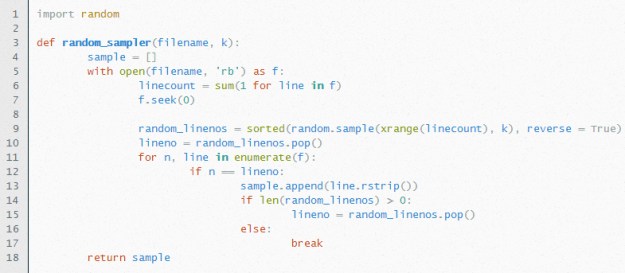

On Windows, the following Python code snippet could be used to achieve a similar result (please let me know if there are any built-in options):

Source: http://metadatascience.com/2014/02/27/random-sampling-from-very-large-files [accessed: 31/05/2015]
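
A minimal sketch of such a Python approach, not taken from the source cited above, could use reservoir sampling, which reads the file once and never holds more than the requested number of lines in memory. The function names sample_lines and write_sample, the seed parameter and the UTF-8 encoding are assumptions made for illustration; adjust them to your own files.

```python
import random


def sample_lines(path, k=100, seed=None):
    """Return up to k lines drawn uniformly at random from a text file.

    Uses reservoir sampling, so the file is read only once and only k
    lines are kept in memory at any time.
    """
    rng = random.Random(seed)
    reservoir = []
    with open(path, encoding="utf-8") as handle:
        for index, line in enumerate(handle):
            line = line.rstrip("\n")
            if index < k:
                # Fill the reservoir with the first k lines.
                reservoir.append(line)
            else:
                # Keep the new line with probability k / (index + 1).
                slot = rng.randint(0, index)
                if slot < k:
                    reservoir[slot] = line
    # Shuffle so the output order is random as well, similar to shuf.
    rng.shuffle(reservoir)
    return reservoir


def write_sample(in_path, out_path, k=100, seed=None):
    """Write a random sample of k lines from in_path to out_path."""
    sample = sample_lines(in_path, k=k, seed=seed)
    with open(out_path, "w", encoding="utf-8") as out:
        for line in sample:
            out.write(line + "\n")
    return len(sample)
```

Calling write_sample("results.txt", "random_sample.txt", k=100) would then play the same role as shuf -n 100 -o random_sample.txt results.txt. If the file has fewer than k lines, all of its lines are returned in random order.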